Who Cares About Levels?

Let’s say you have a full movie that you edited in Premiere. You watch it in the theatre or a festival. The whole time you’re thinking, this looks really washed out. But you can’t put your finger on why.

Or maybe you’re kicking out your final from Resolve with an Avid codec like DNxHR. You upload it to YouTube and it just doesn’t look quite right. The blacks are really crushed and the whites are blown out.

What gives??

Both of the scenarios above are potential levels issues.

So what are levels?

Like most things in video that are difficult to understand, levels come from the ancient past of video creation. Analog video hardware like tape decks and monitors were set to record and display video levels. Film scanners as well as computer generated graphics on the other hand usually recorded or used full range data.

Nowadays, levels aren’t understood well. The demise of expensive video hardware and the turn towards software have made some of the technical concepts of video seem obsolete. But these concepts still apply to professional video work.

So why learn about levels at all?

Since software and digital files are so much more ubiquitous now, levels are an important concept to understand, especially as a professional. By understanding levels, you can set up a correct signal path in a color bay, render your files with the right color space and encoding for another artist, or export your film or commercial correctly for a film screening or the internet.

What are levels?

Levels refer to the range of values contained within an image file. Every image and video file is encoded within a specific range of values. At their most basic, there are two main distinctions: full for computer displays and video for video monitors.

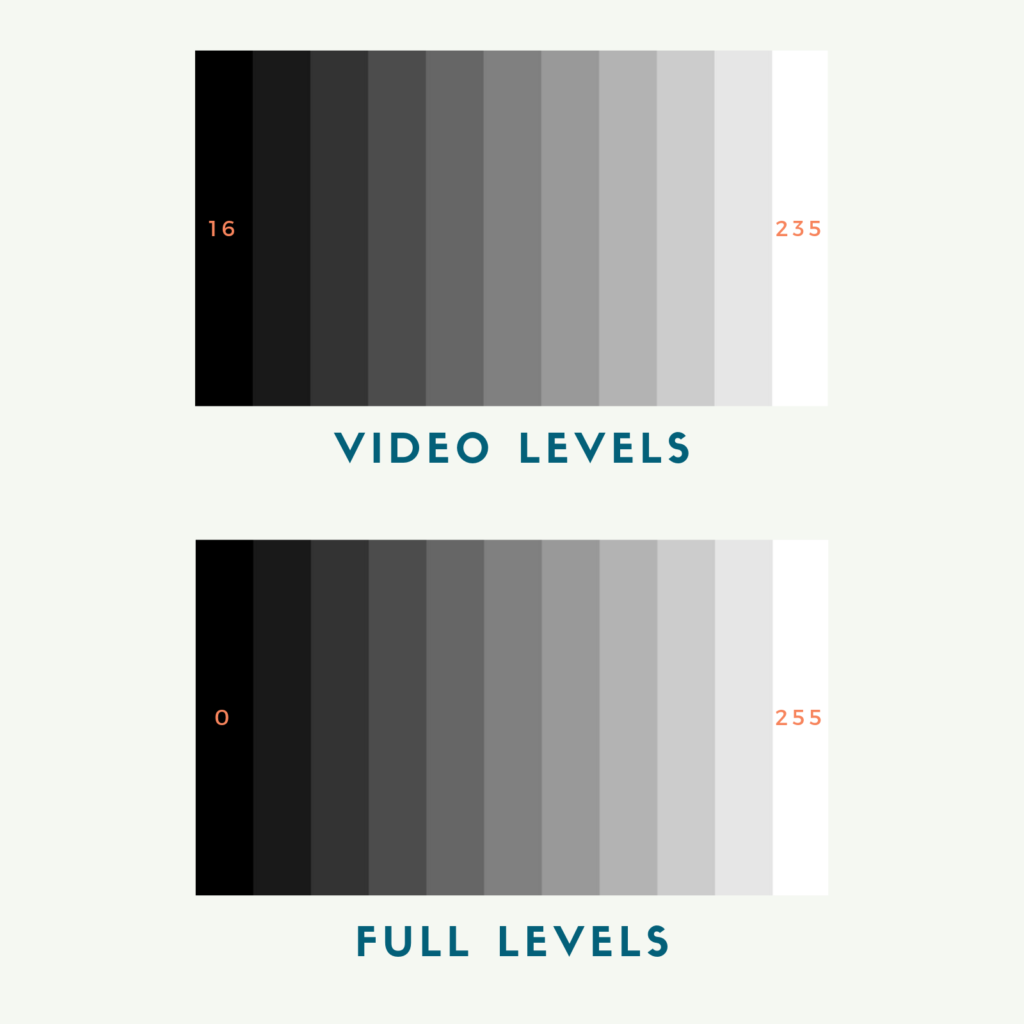

Full level files encode their image data within a full range container. For full range 8-bit files, this means values from 0 to 255, 0 being pure black and 255 being pure white. For 8-bit video range files, this means values from 16-235, 16 being pure black and 235 being pure white.
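The same two ranges carry over to higher bit depths: the video limits are the 8-bit limits shifted left, and full range uses every available code value. Here is a minimal sketch of that arithmetic. The function name `level_range` is made up for illustration and isn't taken from any piece of software.

```python
def level_range(bit_depth, video):
    """Return (black, white) integer code values for a bit depth of 8 or more."""
    if bit_depth < 8:
        raise ValueError("this sketch only covers bit depths of 8 and up")
    if video:
        # Video range limits are the 8-bit limits (16 and 235) shifted left.
        shift = bit_depth - 8
        return 16 << shift, 235 << shift
    # Full range uses every code value the bit depth allows.
    return 0, (1 << bit_depth) - 1


for bits in (8, 10, 12):
    print(bits, "bit  full:", level_range(bits, video=False),
          " video:", level_range(bits, video=True))
```

For 10-bit this gives 0-1023 for full and 64-940 for video, which are the numbers you’ll see on most grading scopes.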

Many cameras only shoot in video range with values between 16-235. For values beyond video levels, some cameras offer options to capture extended values higher than 235 or lower than 16. These values are sometimes referred to as super brights or super blacks. In file encoding these values can also be referred to as YUV headroom or footroom. We’ll talk more about these files later in the article.

RAW video files on the other hand can be debayered into full or video ranges depending on how the files are interpreted in the software.

All digital graphics are encoded as one or the other. Generally, video files are encoded with video levels and graphics or image sequences are encoded with full levels.

The reason for this is that traditionally video files were watched on video monitors, which were designed to display video levels, and graphic files were viewed on a computer monitor connected to the output of a computer’s graphics card, which is full levels.

Full Levels and Video Levels Terminology

So why is this concept so confusing and convoluted?

Cameras, software, displays, codecs, scopes, etc. each have different ways of describing levels. And sometimes the terminology overlaps with color space terminology. Below are some examples of the different terminology used to describe full and video levels:

WTF!

That’s an insane amount of terminology to describe the same thing.

While the nomenclature is confusing, the basic concept itself isn’t. What is confusing is knowing what levels your files are and how your software is treating them.

Most of this terminology comes from broadcast history. Tapes and files were usually within the legal broadcast range for video which is 16-235. Any values beyond this would be clipped or your file or tape would be flagged during QC for out of range values. This is still the case for delivering broadcast compatible files.

Today, tapes have largely been replaced with files and software.

Software interprets the level designation based on the input information of your files. If you’re working with video files, it can be really tough to understand why they look different in different pieces of software, or why they look right inside your program but not after you export them.

Levels and Software

Color management has evolved very rapidly in the last few years. With the accessibility of DaVinci Resolve, other software like Nuke, Avid, FCPX, and Flame has opened up options for color management. Levels are an important part of that conversation.

Each piece of software handles color management very differently. Some software works in a full range environment, other software is video range by default. More and more software packages offer options for working in a multitude of color spaces and level designations.

A few examples from post production software:

- Nuke is full-range linear by default

- Avid is rec709 video levels by default but can be changed

- DaVinci Resolve is full-range 32-bit float internally

The most important thing to understand about working with video files within post production software is that values are scaled back and forth between full and video levels based on the software interpretation and project settings.

DaVinci Resolve, for example, which is one of the most flexible programs for color space, works in a full range, 32-bit float ecosystem internally. Any video level files that are imported into Resolve are flagged as video automatically and scaled to full range values.

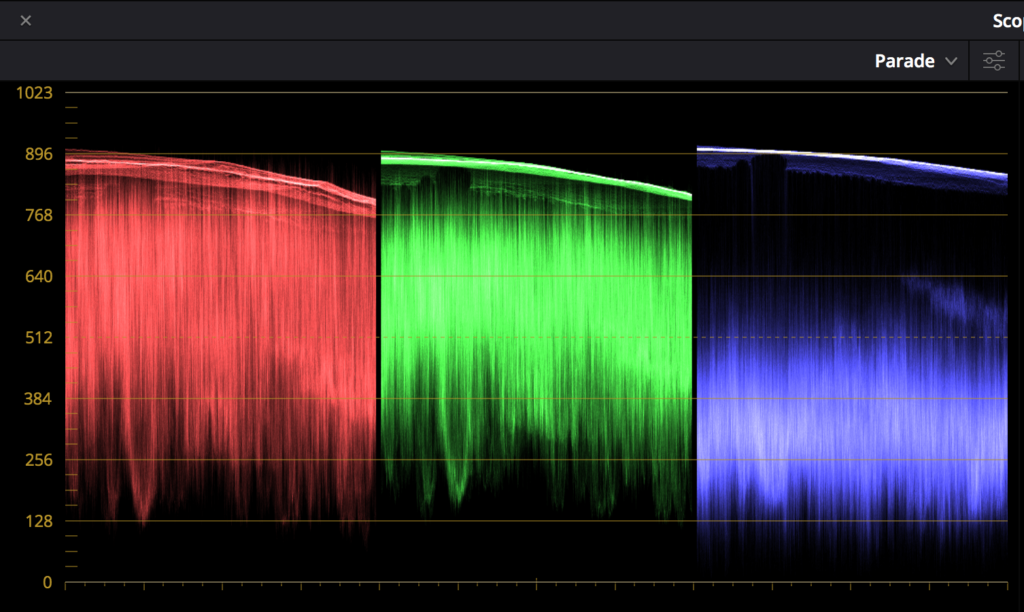

While the processing is 32-bit float, Resolve’s scopes display 10-bit full range values. With 10-bit values, full range is 0-1023 and video range is 64-940.

So Resolve is working with full range data internally. If there is a video card attached like the UltraStudio and data levels aren’t selected, Resolve will scale those internal full range values to video range values before sending a video signal to a display monitor.

The software is scaling values back and forth from video to full and back to video. This is an important concept to absorb.
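To make that back-and-forth concrete, here is a rough sketch of the two scalings for luma or RGB code values. It is not Resolve’s actual code, just the linear math implied by the 64-940 and 0-1023 ranges, and the function names are made up. Chroma channels in YCbCr use a slightly different range and are left out.

```python
def _limits(bit_depth):
    """Video black, video white and full range maximum for a bit depth."""
    shift = bit_depth - 8
    return 16 << shift, 235 << shift, (1 << bit_depth) - 1


def video_to_full(code, bit_depth=10):
    """Stretch a video range code value out to the full range scale."""
    black, white, full_max = _limits(bit_depth)
    return (code - black) / (white - black) * full_max


def full_to_video(code, bit_depth=10):
    """Squeeze a full range code value into the video range."""
    black, white, full_max = _limits(bit_depth)
    return black + code / full_max * (white - black)


print(video_to_full(64), video_to_full(940))   # video black and white on import
print(full_to_video(0), full_to_video(1023))   # full black and white on output
print(video_to_full(1000))                     # a super bright lands above 1023
```

The results are kept as floats on purpose. A value above video white scales to something above 1023, which an integer container would clip but floating point math can carry.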

That’s how Resolve works. Avid, on the other hand, has been re-designed with tons of color space options now as well. You can interpret your source files as video or full levels even after you’ve imported the files. And you can pick which type of space you’re working in. You can work in a video range space if that’s how your workflow is set up.

Premiere is a little more limited when it comes to color management. There aren’t a lot of options for re-interpreting your source files into a project color space. You can use some color management settings, but compared with other major NLEs, Premiere Pro and After Effects are definitely not leading the pack.

Scopes and IRE values

The next thing we need to understand when working with levels is how our scopes work and something called IRE.

Here is a definition of IRE from Wikipedia:

An IRE is a unit used in the measurement of composite video signals. A value of 100 IRE is defined to be +714 mV in an analog NTSC video signal. A value of 0 IRE corresponds to the voltage value of 0 mV.

So IRE refers to actual voltage in an analog system. Actual electricity.

Why is an analog measurement like IRE important for the modern age of video?

Post production software still uses IRE values for scopes. If you see a scope with measurement values from 0-100, they are most likely IRE measurements.

How do these IRE values correspond to levels?

With 8-bit encoded files:

Video Levels

1. Black is 0 IRE and 16 in terms of video levels.

2. White is 100 IRE and 235 in terms of video levels

Full Levels

1. Black is 0 IRE and 0 in terms of full levels

2. White is 100 IRE and 255 in terms of full levels

Depending on your editing software, your scopes could correspond to video levels or full levels, but IRE will remain the same.
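The mapping above boils down to one line of math once you know which levels your scope is using. This is a small sketch that treats IRE the way scopes do, as a 0-100 scale rather than a voltage. The function name and its parameters are my own invention.

```python
def code_to_ire(code, bit_depth=8, video=True):
    """Convert a code value to the 0-100 scope scale for the given levels."""
    if video:
        shift = bit_depth - 8
        black, white = 16 << shift, 235 << shift
    else:
        black, white = 0, (1 << bit_depth) - 1
    return (code - black) / (white - black) * 100.0


print(code_to_ire(16), code_to_ire(235))                            # video levels
print(code_to_ire(0, video=False), code_to_ire(255, video=False))   # full levels
print(code_to_ire(255))   # a super bright reads above 100 on a video scale
```

Black and white land on 0 and 100 in both cases, which is why the IRE side of the scope stays the same while the code value side changes.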

Not confusing at all right??

This is why levels are so convoluted among other reasons.

If your NLE is running in a Rec709 environment, the scopes could be represented as video levels, as in Avid. On an Avid scope you’ll see 16-235 on one side and 0-100 on the other. Now you know why. 😉

For a rec709 environment like an Avid project, full level files will be scaled to video levels.

Scopes are relative. While IRE is a bit of an outdated concept, it’s helpful to have a scale from 0-100 to simply describe the video range. For your particular piece of software, it’s important to understand what type of levels environment you’re looking at to understand the scopes.

Encoding Files: Scaling Between Full Levels and Video Levels

When we start to talk about video files, we have to get some more terminology out of the way. This is where we start to hear terms like 4:4:4, 4:2:2, RGB, YUV, and YCbCr. ProRes444 for example or Uncompressed YUV.

In general, RGB refers to digital computer displays and YCbCr refers to digital video displays.

Historically, RGB values were converted to YCbCr values to save video bandwidth, which used to be far more costly. We still live within this legacy to a certain extent. RGB is typically 4:4:4 with full range values, which means there is no chroma subsampling in the encoding. All that color information is maintained. YCbCr, on the other hand, is typically 4:2:2 and video range.
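For the curious, here is roughly what that conversion looks like for a single 8-bit pixel. I’m assuming the Rec709 coefficients here since the article doesn’t name a matrix, and the 4:2:2 step is a deliberately crude pair average. Real encoders use proper filters, and both function names are made up.

```python
def rgb_to_ycbcr_709(r, g, b):
    """Full range 8-bit R'G'B' (0-255) to video range 8-bit Y'CbCr."""
    rn, gn, bn = r / 255, g / 255, b / 255
    # Rec709 luma weights (an assumption, the article does not specify them).
    y = 0.2126 * rn + 0.7152 * gn + 0.0722 * bn
    cb = (bn - y) / 1.8556
    cr = (rn - y) / 1.5748
    # Luma is placed in 16-235, chroma is centered on 128.
    return round(16 + 219 * y), round(128 + 224 * cb), round(128 + 224 * cr)


def subsample_422(row):
    """Keep every luma sample, average chroma over horizontal pixel pairs."""
    ys = [p[0] for p in row]
    cbs = [(row[i][1] + row[i + 1][1]) / 2 for i in range(0, len(row) - 1, 2)]
    crs = [(row[i][2] + row[i + 1][2]) / 2 for i in range(0, len(row) - 1, 2)]
    return ys, cbs, crs


row = [rgb_to_ycbcr_709(*px) for px in
       [(255, 0, 0), (255, 0, 0), (0, 0, 0), (255, 255, 255)]]
ys, cbs, crs = subsample_422(row)
print(row)
print(len(ys) + len(cbs) + len(crs), "samples instead of", 3 * len(row))
```

Four pixels go from twelve samples to eight, which is where the bandwidth saving comes from.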

I won’t get into every description of these technical aspects of video encoding. The thing I want to focus on is what your files are doing and how your software is interpreting them based on what the file is. If you’re interested in digging into the history of video and more technical information about the above terms, Charles Poynton is the master of video technology. Here is a link to some of his work: http://poynton.ca/Poynton-video-eng.html

There is a misconception that video files must be converted to full range to be viewed properly on a computer display.

This simply isn’t true. Video range files can be displayed correctly on a computer monitor. What is important is that the software playing back your video file knows it is a video range file. Thankfully, most software is designed to work out the levels from the file’s encoding.

For example, if you’ve exported a ProResHQ quicktime with video levels from Resolve (which Resolve will render by default for ProResHQ,) that file will look correct if you play it back with a program that understands it is a video level file.

For the most part, video software is good at estimating the correct level designation for your video files based on information in your file.

However.

This is where things get tricky.

The line between full range and video range files has become much more muddy with the latest digital codecs.

Quicktime and MXF codecs like DNxHR, ProRes444, Cineform can contain YUV values or RGB values.

While these are great, high quality codecs, they can also be tricky to use in real-life workflows. If you encode a file with video levels as ProRes444, most pieces of software will interpret your file correctly. However, if you encode your file as ProRes444 with full levels or RGB values, most post production software will incorrectly assume your file is video levels and clip values.

2023 UPDATE: It’s come to my attention that there is some confusion on whether ProRes444 and ProRes444XQ can contain true RGB full data levels. According to some commenters and users (see Chris Seeger’s comment below,) it seems that ProRes444 and ProRes444XQ contain YCbCr values only. Since ProRes is a container for whatever it is sent via an application, in theory, you can render full levels to it but it is possible that internally only YCbCr values are stored. I have not confirmed this with Apple personally and for further reading, see this discussion on Lift, Gamma, Gain. As others have stated, it appears that this information is not readily available: https://www.liftgammagain.com/forum/index.php?threads/export-prores4444-as-ycbcr-vs-rgb.8990

In my own testing with rendering from Resolve, Resolve assumes that ProRes444 is video levels, and so does Premiere when you import it, even though, according to the ProRes whitepaper, ProRes444 can encode RGB 4:4:4 data or YCbCr 4:2:2 data (see the 2023 UPDATE above for revised information).

https://www.apple.com/final-cut-pro/docs/Apple_ProRes_White_Paper.pdf

BUT.

Even if you did encode RGB 4:4:4 data to a ProRes444 file, your software would still need to correctly interpret that data and not clamp values. This is why these newer 4:4:4 codecs are so confusing. Premiere in that case would clamp those full range values or assume the file was video range. You still might be able to access those values, but Premiere isn’t seeing them as intended.

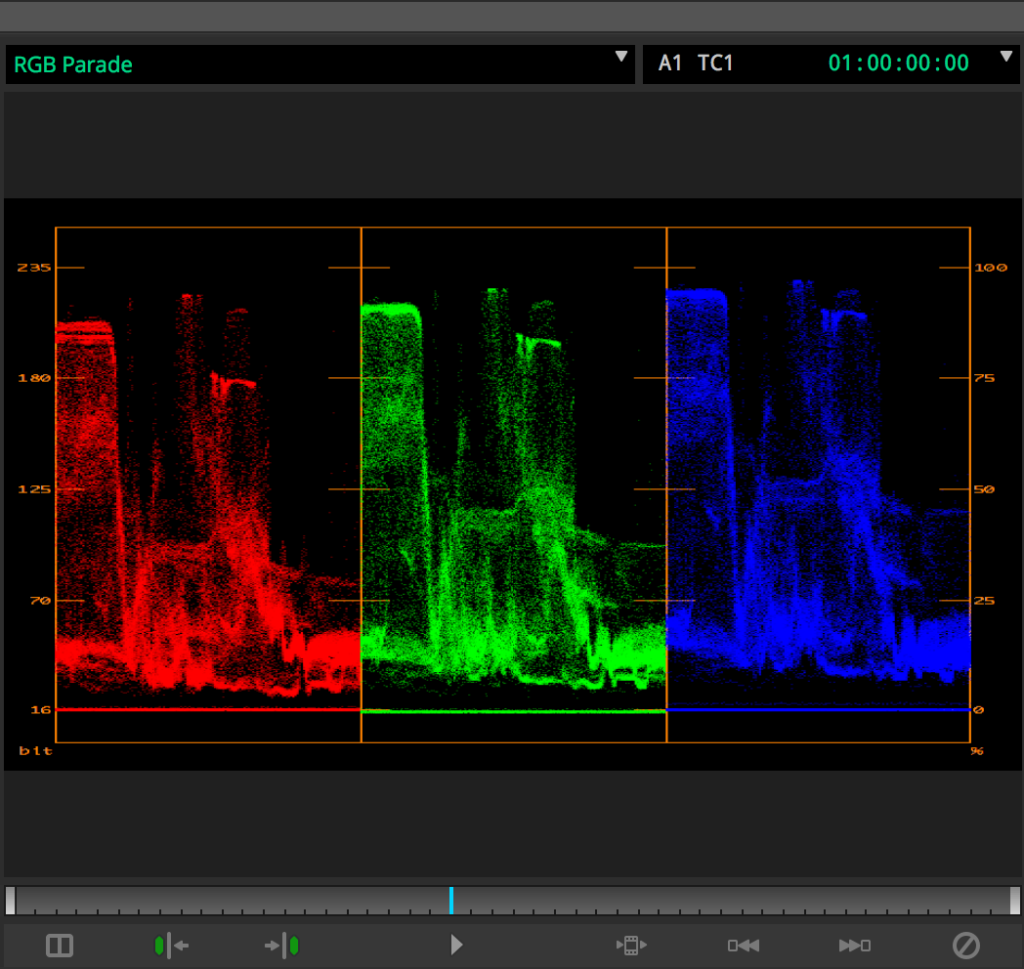

DNxHR 444 is another codec that can confuse software. In my own tests with Resolve and Premiere, an auto level DNxHR 444 file rendered from Resolve will be interpreted as a full level file in Premiere. But Resolve actually encodes it to video levels with auto selected. Therefore the levels will be scaled twice, leading to washed out luminance values in Premiere.

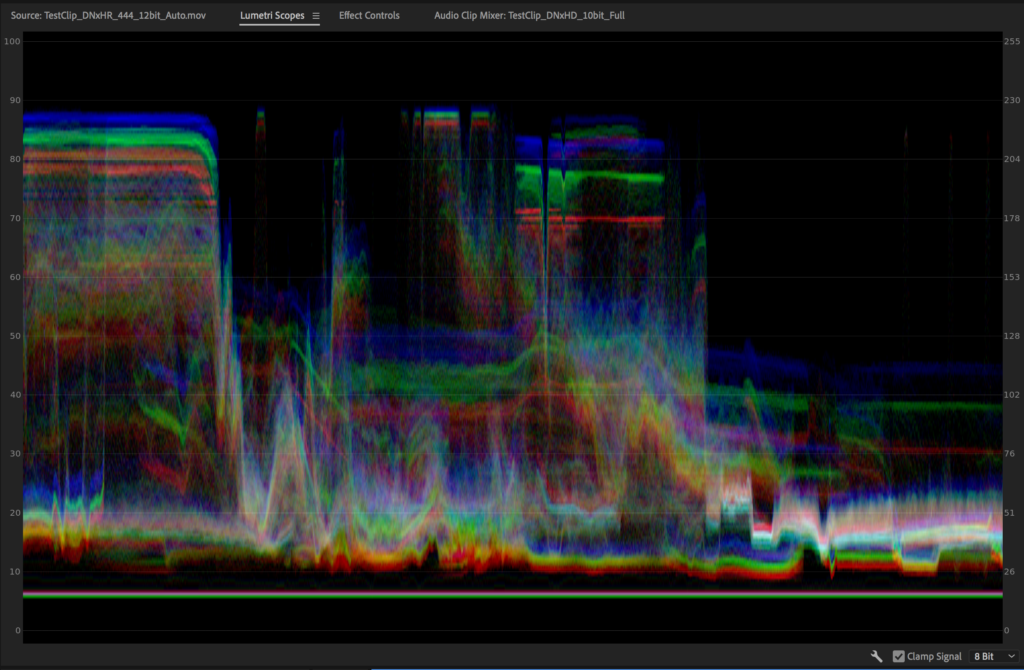

Here’s an example when rendering out a RED RAW clip as DNxHR 444 12-bit from Resolve with Auto Levels in the render options:

Clearly it looks washed out in Premiere Pro. The base line at the bottom of the scope is hovering above 0 at around 16 (not a coincidence.) Premiere expects full levels from the DNxHR 444 file. But on auto levels from Resolve, it actually renders the file out with video levels.

This is actually one great way to tell if you are having an issue with levels. If your black level is clamped on your scopes around 16 (8-bit scopes) or 64 (10-bit scopes,) you probably have incorrect level interpretation going on in your software.
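If you can read the lowest value off your scope, that rule of thumb is easy to turn into a quick check. This is only a heuristic sketch: the function name and the tolerance are hypothetical, and it only means something when the shot really contains true black.

```python
def black_floor_suspicious(scope_min, bit_depth=10, tolerance=None):
    """Return True when the lowest scope value sits at video black."""
    shift = bit_depth - 8
    video_black = 16 << shift
    if tolerance is None:
        # Hypothetical tolerance: plus or minus 2 codes at 8-bit, scaled up.
        tolerance = 2 << shift
    return abs(scope_min - video_black) <= tolerance


print(black_floor_suspicious(64))               # 10-bit scope, floor at 64
print(black_floor_suspicious(0))                # 10-bit scope, floor at 0
print(black_floor_suspicious(16, bit_depth=8))  # 8-bit scope, floor at 16
```

A floor sitting right at 16 or 64 on a full range scope suggests a video level file is being read as full.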

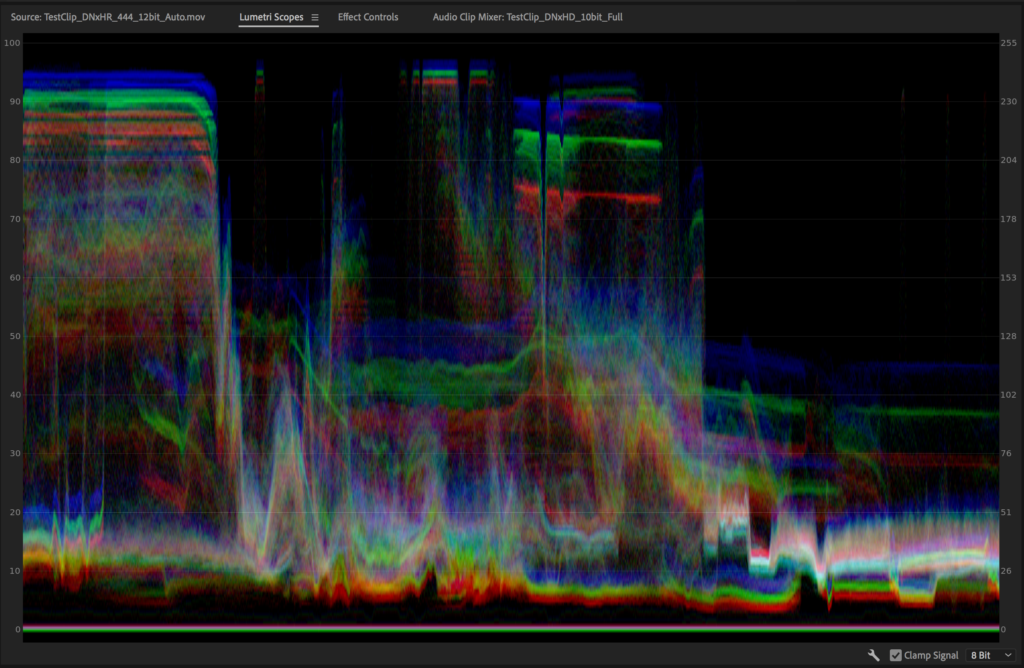

So if we try again but change the data levels setting to Full instead of Auto, let’s see what happens.

In practice, it makes sense that Premiere would assume that a 444 file would be full range. Full range files are 4:4:4. Resolve, on the other hand, should know that DNxHR 444 is a 4:4:4 codec when it is rendering it. But it appears that it thinks a DNxHR QuickTime should be video range, not full.

Files have levels information embedded within the file headers. This is how the software knows how to scale the levels. Sometimes this information is wrong, or it is read incorrectly depending on which piece of software is interpreting the file, which makes things very confusing.
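One way to peek at that header information yourself is with FFmpeg’s `ffprobe`, which is not something this article relies on, so treat this as a side sketch that assumes `ffprobe` is installed and on your PATH. It reports the range tag of a video stream as `tv` (video levels) or `pc` (full levels), and reports nothing useful when the file is untagged. The helper names are my own.

```python
import json
import subprocess

# ffprobe's names for the range tag, mapped to the terms used in this article.
RANGE_NAMES = {"tv": "video", "pc": "full"}


def describe_range(tag):
    """Translate an ffprobe color_range tag into video or full."""
    return RANGE_NAMES.get(tag, "untagged or unknown")


def probe_color_range(path):
    """Ask ffprobe for the range tag of the first video stream in a file."""
    result = subprocess.run(
        ["ffprobe", "-v", "error", "-select_streams", "v:0",
         "-show_entries", "stream=color_range", "-of", "json", path],
        capture_output=True, text=True, check=True,
    )
    streams = json.loads(result.stdout).get("streams", [])
    tag = streams[0].get("color_range") if streams else None
    return describe_range(tag)


# Example call with a hypothetical file name:
# print(probe_color_range("my_render.mov"))
```

Keep in mind this only tells you what the file claims to be. As the tests above show, the tag and the actual encoded values don’t always agree.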

Since there are so many codecs, color spaces and different range values, it would be very time consuming to compare them all. What is most important is that you understand that 4:4:4 codecs can be tricky and it’s important to test out your workflows and file scalings before using them in production.

Especially for Windows users of Resolve and Premiere, it’s important to understand how to use DNx codecs to pass files back and forth properly since ProRes encoding isn’t possible. Check out my other article about picking your NLE here: https://www.thepostprocess.com/2019/02/04/how-to-choose-your-video-editing-software/

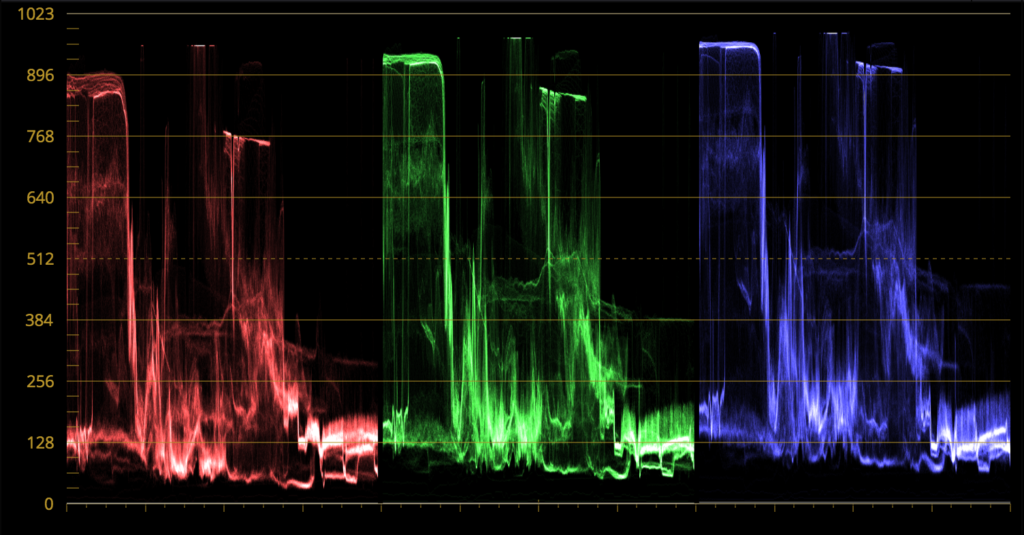

Testing Levels with Color Bars

Generating color bars at the beginning of a shot or program is a great way to test out any issues with codecs or improper levels scaling. Then you’ll always know if what you’re seeing is correct.

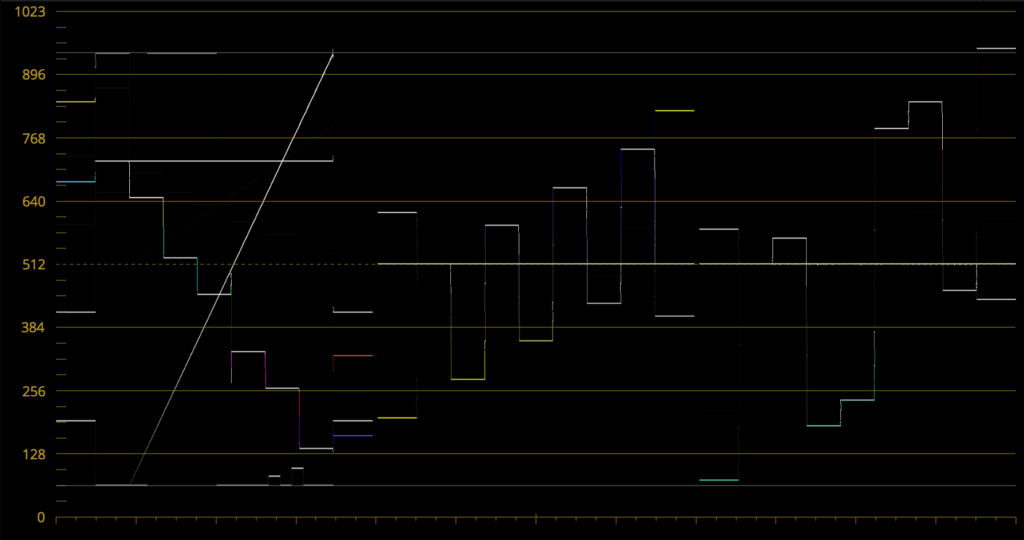

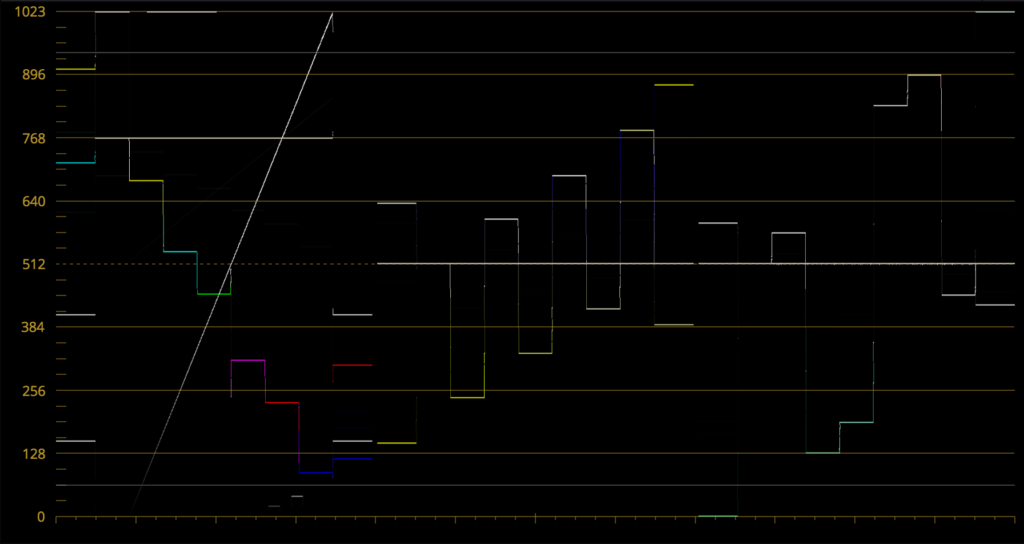

The top example is being scaled wrong, as you can see from the parade scope. This is a video level file being interpreted as full by the software. The levels sit at 64 and 940, which is a telltale sign of a levels scaling issue. You can test this by exporting any file and looking at a scope in any NLE.

Full level files being interpreted as video have the opposite issue. The values will go beyond 0 and 1023.

These color bars are correct as the video level values are scaling properly to 0 and 1023.
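The three color bar outcomes described above can be reproduced with a few lines of math. This sketch only tracks the black and white patches of the bars on a 10-bit scale, and the function names are invented for the example. It simulates the interpretation step, not any particular NLE.

```python
BLACK_V, WHITE_V, FULL_MAX = 64, 940, 1023  # 10-bit limits used in this article


def encode_as_video(full_value):
    """Write a full range value into a video level file."""
    return BLACK_V + full_value / FULL_MAX * (WHITE_V - BLACK_V)


def read_as_video(code):
    """What the scope shows when software treats the file as video levels."""
    return (code - BLACK_V) / (WHITE_V - BLACK_V) * FULL_MAX


def read_as_full(code):
    """What the scope shows when software treats the file as full levels."""
    return code


def scope_range(codes, reader):
    """Lowest and highest values the scope would show for a file."""
    shown = [reader(c) for c in codes]
    return min(shown), max(shown)


bars = [0, 1023]                                  # black and white patches
video_file = [encode_as_video(v) for v in bars]   # bars rendered as video levels
full_file = bars                                  # bars rendered as full levels

print("video file read as video:", scope_range(video_file, read_as_video))
print("video file read as full: ", scope_range(video_file, read_as_full))
print("full file read as video: ", scope_range(full_file, read_as_video))
```

The first case lands on 0 and 1023, the second is stuck at 64 and 940, and the third overshoots both ends, where it would be clipped.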

Working with Hardware and Levels

Understanding levels is key to setting up a proper viewing environment for your content. Even if you’re only making web videos on your computer without a dedicated video card, it’s important to understand the choice that you’re making.

Things are changing quickly with display technology. What is true about signal paths today might change tomorrow. So I’ll talk about the options for signal paths and how levels fit into that.

There are two major schools of thought when it comes to displaying and monitoring your video output:

- Buy a dedicated video card with a dedicated video monitor for any video work (video range signal chain)

- Use the output of a computer’s graphics card to drive a calibrated computer monitor or television (full range signal chain)

For most people doing video work today, it’s important to have a video monitor. Why is this important even if you’re making web videos? A few reasons:

- You need to be able to calibrate your display to match a standard color space

- Computer displays can’t be calibrated to consistently match standard color spaces for video work

- Operating systems and internal graphics cards make it difficult to match video standards anywhere near as closely as an external display

- Graphics cards don’t offer the same software integration with timeline resolution and frame rates like a dedicated video card would

- Newer display technology and updated graphics cards may change the reliance on dedicated video gear for monitoring

- Old broadcast video standards of the past could go away with the rise of fully computer based workflows and signal chains

So a video monitor and a dedicated video card is still important. Today at least.

Does this mean we should only build video level signal chains?

Many video monitors today can display full range signals. Video cards can kick out full range signals.

So why should we stick with video range if we can do full?

For a few reasons. Most video codecs and video software still use color spaces based in the video world like rec709. Files like ProResHQ and DNxHD are video codecs with video levels at their core. Many post facilities are based around ProRes or DNx of some kind. Introducing an RGB signal chain into the mix is exciting in theory, but perfecting that setup would require a lot more effort for a small return.

That being said, RGB based workflows are becoming more popular. They might be the standard soon with all computer based workflows.

Usually in higher end workflows, quicktimes aren’t used. The files are most likely full range 10-bit DPX files or 16-bit float OpenEXR files which are much bigger containers than any signal chain or display technology currently available.

For film scanners and projectors, full range signal chains are standard. In scenarios like this, it makes sense to build an RGB pipeline to maintain an unconverted, unscaled signal all the way through.

For CG heavy facilities or workflows, full range has its benefits.

For most editorial workflows, sticking with video range systems works the most painlessly for now. As codecs continue to increase in quality and drive speeds continue to go up at cheaper prices, RGB, full range files and hardware might begin to replace more traditional video based signal chains.

Best Practices for Working with Full and Video Levels

Now the big question. How do we use levels on a day to day practical basis?

Here are some practical rules of thumb for working with levels in your post production workflow:

- If you’re using 444 codecs, understand that software might interpret or render them with the wrong scaling. This can lead to your files being clipped or washed out. Test out your workflow with 444 codecs.

- Test out any renders or file exports with color bars to make sure the levels are being interpreted or scaled correctly

- Most cameras shoot video level signals not full. Some of these cameras allow for YUV headroom. Check your settings for your camera to understand how your files are created so that you interpret them correctly in your software and use those out of range values.

- Make sure that your signal path for monitoring is consistent and matches across software and hardware outputs whether it’s video or data levels.

- Files exported for broadcast should be video levels. Most of the time broadcasters require Rec709 ProResHQ 4:2:2 which is video levels.

- Files exported for the internet should be video levels. Most codecs that are used for file delivery are video levels not full. Encoders expect video level files for most delivery formats.

- Exporting files using video levels WILL NOT lead to your files looking washed out. Exporting files using full levels WILL NOT make your files look better or more accurate. Even though your computer display is RGB, video level files will look correct on your screen because players will scale the values correctly.

Conclusion

I hope this article has helped to demystify the concepts of levels in video post production work. There are many misconceptions about levels on the internet. My hope is that this information will help to dispel some of the confusion with levels.

If you know how your software is interpreting your files when it comes to levels, you’ll be able to handle any scaling issues or signal chain mismatches very easily.

Please leave a comment below with any issues or questions regarding my conclusions above. Have a great day.

Other Links for Further Reading on Levels

https://bobpariseau.com/blog/2018/5/2/digital-video-or-lost-in-color-space

Dude, you’re amazing. Thanks for such a detailed and concise explanation.

Armen, thank you for the comment. So glad it helped!

I’ve been battling with this for years, thankyou for the explanations. I’m not going to pretend I understood all of it but I’m going to refresh all my Avid display and project settings in the morning and see how I get on. Are you willing to answer an email or two if I need a little help?! Many thanks again

Of course! Shoot me an email at dan@thepostprocess.com. Thanks for the comment!

I heard blacks for NTSC broadcast is 7.5IRE. does that still apply even if I choose video levels and my blacks are at 0? And if at 0, would that come out correctly on broadcast displays? Thanks a lot for the awesome tutorial.

Hey Mex, that’s a great question. 7.5 IRE is part of an older analog specification for NTSC or standard definition. If you’re staying within a digital system (which is most likely unless you’re using older analog equipment,) you don’t have to worry about 7.5 setup. 7.5 IRE is an analog measurement of voltage that corresponds to 16 (video levels) within an 8-bit digital system. Most displays today are HD and digital so you’d only need to worry about the usual video/data levels. Not 7.5 NTSC if that makes sense.

Thank you so much for those clarifications! I did understand the concept of the different data levels in its basics, but had no idea from where it would come from. Additionally the colorists I know color their work in a range from 64-940 and told me I should do so as well, which confused me even more, because when you do this in video environment all gets washed out. so I guess they were referring to Full Data level environments without specifying.

Finally some more clarity 🙂

Bastian

Thanks for the comment! Most grading software works within a full range space. Some of the confusion is in the signal monitoring. They might be monitoring in video space (most do.) So internally, it’s full range and the video signal out is scaled properly for a video display. Same with any file exports, usually video range unless DPX, etc.

I never leave comments on websites but mate this is such a great article. Color has so much complex information, and a lot of times people who write about it only seem to want to show how much they know. I appreciate the tests and concrete, “how does this affect your workflow?” information. Great stuff.

Thank you for the comment! Man I agree. That was always frustrating to me trying to find answers for the more in depth technical issues. Either I’d get a really abstract explanation or a really shallow, general one. Glad the tests were helpful to you. Working on more now!

Dan,

Wow, what a fantastic post! Just ran across your blog from a Reddit thread. Quick question for you: I’ve recently moved to Resolve on Mac after using Premiere for the past ten years. I’m noticing that my H.264 outputs after coloring from Canon RAW shift in gamma between players (Quicktime and web delivery)…

After reading your post, I guess I assume that I’m going from full range space to video space when exporting? I didn’t have this issue when I would work in Premiere Pro and then export to H.264.

Learned a lot from reading your post. I will be referring back to it as I try to apply it in my own work.

Thanks!

Hi Matt, thanks for the comment! I’m actually working on another blog post to address that very issue which is most likely not a levels issue at all. It’s much more likely that you’re seeing a QuickTime tagging/Color Space issue. Resolve’s viewer is not managed through Apple’s ColorSync and Quicktime is which can lead to a disparity in color and contrast between the two. Stay tuned for the next article!

Looking forward to it! I’ve added your website to my RSS reader so I won’t miss a post.

Perfect!

This is my question, too. How do we deal with the gamma shift between players? Also, how do Vimeo, You Tube and other “recoders” deal with our color spaces and levels? I look forward to your next blogpost. Thank you for so generously sharing your experience.

Thank you for the comment! I’m very close to finishing my next article about those issues.

Hello, first of all, congratulations on the excellent post. I have a question about Avid. When I finish editing material captured on an Atomos in Apple ProRes 422 HQ and, after retouching the color, want to export, I always find myself staring at these export options: keep as legal range or scale from legal to full range. I have always exported with keep as legal range, but now I’m shooting RAW on the Blackmagic URSA Mini Pro and I don’t know whether to export with keep or scale from legal to full range. Of course, "full range" sounds as if it carries all the information, so I’m still unsure. What do you think? Kind regards. Thanks for all the information.

If I understand your question (I copied and pasted into google translate as I don’t speak spanish,) the material was captured on an atomos recorder as ProResHQ? And when you’re exporting after re-touching color, you want to know if you should keep it full or legal? If I have your question right, anytime you’re working with ProRes media, sticking to video or legal across the board is a much better option than full. With full even if your source captures full range values, once you go to ProRes, most applications will interpret it as video or legal values. So unless you’re using a high end application like Nuke or Flame, sticking with legal or video levels with ProRes will just be much easier to manage especially if you’re mastering to ProResHQ.

Thank you for taking the time to explain this! It’s great information but, I don’t think I’ll grasp it unless I can get info specifically for my situation.

Most of what I work with is ProRes422, XAVC from a Sony F5 and H264 from a Panasonic EVA1.

All 10bit, I believe.

Whenever I bring a file into Resolve, I set it to ‘Full, thinking that allows for greater dynamic range to play with in grading. After all, it looks flatter and therefore, more appropriate for grading.

Is this flawed thinking?

If I leave everything at ‘Full’ and grade to an external video monitor (Flanders Scientific) and achieve a good looking image, is this wrong?

Or, should I just leave the interpretation up to Resolve and grade accordingly?

Thanks for the comment Richard.

It’s a common misconception that full levels means more dynamic range than video. Especially from the sources you’ve captured which are most likely video levels from the sounds of it. It’s better to think about the difference holistically. Full and video are two different containers for digital information. In general, you’re not going to see a huge difference in dynamic range. Your codec, bit depth, color space, type of encoding, etc. will have far more impact on dynamic range than video levels. Even if it looks flatter, that doesn’t mean you have that much more dynamic range. It’s scaling your file.

When you get into post deciding on full vs. video comes more down to signal chain and how you process your files. Traditionally video monitors mean video signal chain. But Flanders monitors do have the option to switch to full levels as well. The problem is that most programs treat video based files like QuickTimes as video levels most of the time. So you might run into issues with how QuickTime interprets your file or having to manually scale to full and back. An all full workflow is definitely possible, but there isn’t much of a reason for it unless your whole pipeline is designed for it.

Thank you for pointing out how color management is handled differently in different software. I think people assume that all editing software is the same. I hope they come to realize that they are all different and it is important to learn how to manage colors when you use each one.

Thanks for the comment Franklin. It’s true. It would certainly be great if there was more visibility within each software app to see how levels are being interpreted, color spaces, etc. I think as display technologies evolve and more content is generated for the internet, we’ll start to see this subject evolve.

I’m.get used to write very deep articles. But man you nailed it as a king. Congratulations! This is amazing!

Even me with more than 20 years on this market I got confused about this subject lots of times, your explain is perfect! Thank you!

Andre, thank you so much! Really glad the levels article helped you. It’s one of those subjects that I’ve seen so much confusion in understanding so I’m happy that it’s clearing things up for you.

Hey Dan! This article is amazing! I’ve been surfing on Internet for like ages to find good explenation of levels! Thank you so much!

I’ve got question or two. My NLE is Premiere Pro but I’ll be switching to Davinci very soon.

I capture video on GH5 + Ninja V at Vlog-L ProRes HQ/422/LT 10 bit 0-1023 (Vlog can’t be changed to 64-940). I deliver my videos for Internet – yt/fb/insta/webs etc. You are saying Files exported for the internet should be video levels. So for my understanding if I have files recorded on Full levels (Log format forced me to that) I should interprate them as Full range in my NLE and stick to 64-940 scopes during color process and not cross them to 0-1023? Also I always convert log format to Rec709 so is that automatically switch to video levels? What should I do with my Full range files and how to color them correctly? Im kinda confused there, sorry.

Other than that, I heard that files recorded on External Recorders like Ninja V are interpretaded by PP as video levels even if they were shot on full levels so I am forced to switch levels by mysylef. Did you hear about that? Is it true? And how it looks with Davinci?

The other thing is my monitor. I have benq bl2711u which allows me to 10 bit signal with full range. All my work is about delivery for Internet (so video levels) but my files are full levels (log formats) so should I set my Benq to full or video levels?

The answers for my questions are in article probably but I’m not native speaker and I had troubles to understand everything clear even with google translator.

Thank you in advance!

Hi Karol, thanks for the comment. Each NLE works with levels differently. Resolve scales video files to full internally. If you’re grading full level files, I’d stick with Resolve and manually tell it that your file is full levels. You can also manually scale full level files to video range in Premiere if you want to. Most likely Premiere is interpreting any QuickTime file as video levels anyways. Most delivery formats to the internet are expecting video levels which is why I recommend rendering to video levels if the file will live on the internet. Hope that helps!
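For readers who want to see the arithmetic behind "scaling full level files to video range", here is a minimal sketch. The function names are made up for illustration and this is not how Premiere or Resolve implement it internally; it only assumes the usual 8-bit anchors of 16 and 235, shifted up for higher bit depths (64 and 940 in 10-bit).

```python
def full_to_video(code, bit_depth=10):
    """Scale a full range code value into video (legal) range."""
    shift = bit_depth - 8
    black, white = 16 << shift, 235 << shift   # 64 and 940 in 10-bit
    full_max = (1 << bit_depth) - 1            # 1023 in 10-bit
    return round(black + code * (white - black) / full_max)


def video_to_full(code, bit_depth=10):
    """Expand a video range code value to full range, clipping anything outside 64-940."""
    shift = bit_depth - 8
    black, white = 16 << shift, 235 << shift
    full_max = (1 << bit_depth) - 1
    scaled = (code - black) * full_max / (white - black)
    return round(min(max(scaled, 0), full_max))


if __name__ == "__main__":
    for code in (0, 256, 512, 768, 1023):
        print(f"full {code:4d} -> video {full_to_video(code):4d}")
```

Pure black and pure white land on 64 and 940, and everything in between is compressed proportionally, which is why a full range file read as video range (or the reverse) shifts contrast rather than just brightness.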

Hi Dan, thank you for everything. I have a question that is driving me crazy: I work with Davinci Resolve 16 and an HP DreamColor Z31x via a Blackmagic 4K card. When I work with a video signal, I leave Data Levels set to Video in the preferences and I enable “Use video levels (64-940)” on the HP. When I work with a 444 source, I set Data Levels to Full in Davinci. Should I also turn off “Use video levels” on the HP? Or should I just leave video levels in Davinci and only set video levels on the monitor? In short: should I switch both (on the Mac and the HP) or just the monitor?

Thanks

Hi Sebastiano. Thanks for the comment. The signal settings should match in Resolve and in the monitor. So if your monitor is set to full, Resolve should be too. Keep in mind that a 444 source might just refer to the chroma sampling, not necessarily a full range encoded file. Some 444 sources are YUV, which is generally video levels as well. In general, unless you’re working with cinema type workflows in a full range pipeline involving full range RGB DPX files, video levels usually makes more sense and simplifies the process. Hope that helps.

Thank you so much. Three years after it was written, this article is still a gem to many of us.

Like Sebastiano, I am working on an HP DreamColor as well (this monitor is still going strong) and will put this advice into practice.

Also, you mentioned something about a Decklink card vs. the mini-monitor. I’m now using the mini-monitor setup. Is it wiser to use a dedicated card? Doesn’t the mini-monitor ‘work around’ your GPU?

Thanks again Dan!

Thank you Jeroen! I’m glad it’s been helpful to the community. The decklink or the mini-monitor will work. The only real difference is that the decklink lives inside a computer and connects directly to an internal card and the mini-monitor lives externally and connects via thunderbolt. They both do the same essential thing and both ‘work around’ your GPU. Each has different specs and capacities for resolution, type of inputs and outputs, etc. but their function is the same. Hope that helps!

Hey Dan,

I’m going to start referring everyone to this article. I work in VFX, and this is such an important concept for round-tripping to clients and between vendors, but no one seems to understand it. So much early feedback in reviews is about “washed-out looking quicktimes,” and it makes me want to scream.

Often edits come in with the wrong data header, or even with the right header but incorrect baked-in levels, which causes issues all the way through to delivery. Often it is confused with a gamma shift issue; googling the issue produces endless articles on how to “cheat” the problem to get an acceptable result, which isn’t, in my mind, what professionals should strive for.

Thank you for describing this so eloquently.

Totally agree Mike and thanks for the comment. The “gamma shift” issue isn’t well understood either and it’s based on outdated displays. In this case, Occam’s razor applies. With QuickTime, keep it video levels with 1-1-1 tags unless you know what you’re doing and you’ll usually avoid shifting issues. There are exceptions of course. Glad the article helped!

Hi Dan, thanks for this article. It is the only article that explains the problems with Full vs Video levels when moving from Resolve to Premiere and back. I wanted to ask you one question. I work in Resolve and After Effects. I have some color bars from Light Illusion that I use as a reference to determine whether my levels signal path is correct. I have my Eizo monitor properly calibrated to Rec. 709, 2.4 gamma. The problem is that when I import those color bars into After Effects and export them, the exported color bars look washed out in Resolve, with clearly lifted blacks. I have changed the clip attributes in Resolve from Full to Video levels and they still do not show correctly. I have also played around with the Color Management feature of After Effects, but the problem remains.

Thanks,

Alan

Any help would be greatly welcome!

Hi Alan, thanks for the comment. Which file type are you importing into AE? Which file type are you exporting from AE? In general, Resolve assumes levels automatically based on the format coming in. A quick way to test this would be to import the same file into Resolve and AE and drill down into where the shift is being introduced. Hope that helps!

Thanks for your reply Dan!! It is a Blacks & Bars TIFF calibration image from Light Illusion set of calibration images.

I spent some time playing with it back and forth between AE and Resolve. It turns out that AE by default interprets any 422 video codec as “Video” levels, and it also interprets any 444 codec as “Full”. And this is regardless of how these files were exported from Resolve, “Auto” “Video” or “Full”.

So, for 422 files there are no major issues because 422 files mostly need to be interpreted as “Video” levels. But if you need to export a 444 file from Resolve with “Video” levels, the best option is to select “Auto” (Resolve will actually mark it as “Video”) or “Video”.

And then, once imported in After Effects, it will show lifted blacks because AE will interpret it as “Full” due to the 444. The solution is to apply an effect called “Levels- video to computer” to the layer in the composition. And then it will go back to normal.

Best,

Alan

Are you talking about ProRes444? I’ve noticed with Adobe in general that it interprets DNx444 codecs as full, but ProRes444 as video as I’ve written about in other articles. I’ve never had issues round tripping ProRes444 and keeping it video across the board with Adobe. As far as interpretation, it really depends on the information written in to each specific file type and codec. The other thing in AE is whether or not color management is on. It’s a great thing to use bars to test how your workflow is converting at least. Glad you found a solution!

Hi, thank you for the great article! Can you help clear up 16-255 (Not 16-235 or 0-255, but 16-255) for me? If you shoot a video in 16-255, all of the values above 235 are clipped. As I understand it, these values above 235 are called super whites. The information is still there but you have to bring the levels down in Premiere or another NLE so they aren’t clipped. However, if you just play back a 16-255 video on your computer or upload it to youtube, all values above 235 are clipped on playback.

What I don’t understand is what is this for? If you shoot a scene twice, once in 16-235 and once in 16-255. If you bring the levels down for the 16-255 version, the scene will look identical. There is no increase in dynamic range or detail. It also doesn’t make any sense to me because if you just want more bits of information, why do 16-255 instead of 0-255? Here’s an article I found with pictures to help illustrate what I’m talking about: https://web.archive.org/web/20141029150901/http://blog.josephmoore.name/2014/10/29/the-three-most-misunderstood-gh4-settings/

Anyway, if you could help clear this up for me, I would be eternally grateful! I cannot seem to find any information about this on the internet and it’s driving me crazy. Thank you very much!

Hi Robby, thanks for the comment. It seems to me the reason for recording to 255 would be to preserve those code values above 235. While 235 might appear to scale those values within range, there’s most likely some scaling numerically happening to bring them in range. It’s a hard thing to quantify without running more precise tests, but I’ll bet each camera manufacturer has a specific reason that they offer that extra recording range. In the past, some software would just clip super white values, but most modern codecs and software can scale those values in range. Hope that helps.
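To make the difference between clipping and scaling super whites concrete, here is a small sketch on 8-bit luma values. It is only an illustration with made-up function names and a simple linear gain around black; no camera or NLE is claimed to work exactly this way.

```python
def clip_to_legal(y, black=16, legal_white=235):
    """What a player does when it simply discards anything above 235."""
    return min(max(y, black), legal_white)


def scale_superwhites(y, black=16, legal_white=235, recorded_white=255):
    """Pull a 16-255 recording down so that 255 lands on 235 (linear gain around black)."""
    return black + (y - black) * (legal_white - black) / (recorded_white - black)


if __name__ == "__main__":
    highlights = [230, 240, 250, 255]  # hypothetical highlight samples from a 16-255 clip
    print("clipped:", [clip_to_legal(y) for y in highlights])
    print("scaled: ", [round(scale_superwhites(y), 1) for y in highlights])
```

With clipping, every sample above 235 collapses to the same value, so the highlight detail is gone. With scaling, the samples stay distinct, at the cost of everything else in the image moving down slightly too.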

First, thanks a lot for this explanation.

If we have a 4K ProRes444XQ project exported from Davinci as LogC DPX (Auto range), graded in Lustre as full range, and re-exported from Lustre (graded Rec709) as full range DPX:

Now the question: I want to do the final export (audio/subtitles/credits) from Premiere.

How can I be sure Premiere is reading the right range exported from Lustre? (Should I do a color bar test like you mentioned?)

Or should I convince the client to do the final from Davinci (with Auto range, because Davinci is smart enough to detect the right range)?

Second question: in this workflow, can I replace the DPX choice with ProRes444XQ?

Thanks a lot, and all my respect.

Thank you for the comment Hasan. Always love to hear people using Lustre. I graded on Lustre for a few years as well. To answer your question, yes I would definitely kick out color bars from Lustre to make sure Premiere is interpreting your full range DPX sequence correctly. If you go the DPX route, I would definitely recommend doing the final from DaVinci. Resolve was built for DPX workflows and is much more flexible with them than Premiere. When exporting your DPX from Premiere as well, you have much less control over tagging and how that DPX sequence is translated to another format. You could also go the ProRes444XQ route from Lustre which would for sure make it easier to use Premiere. QuickTime workflows are much easier in Premiere in my experience. What format does it need to be after exporting from Premiere? I’m assuming you’re working in the feature world with that kind of workflow.

Few notes.

Wrong bit:

“Even though, according to the ProRes whitepaper, ProRes444 can encode RGB 4:4:4 data or YCbCr 4:2:2 data.”

ProRes is always YUV internally. Any RGB data (e.g. 16-bit RGB) sent to the ProRes encoder is always converted to YUV through its internal processing (as 4:4:4, not 4:2:2, which would be pointless, with a maximum of 12-bit precision). If you think otherwise, read Apple’s white paper carefully. It uses some wording which may suggest that data can be stored as RGB, but it’s always YUV (it’s just that the encoder can natively take RGB-based pixel formats as input and also present RGB on output). ProRes by its spec should always be limited levels, and this is why there are problems with full range files. Apple did not intend ProRes to be full range, and there is no dedicated header in ProRes frames to specify range (there is one in DNxHD/R, for example). This still doesn’t stop files from being exported as full range ProRes, but then there is NOTHING in the ProRes data to tell that the file is full range. If the container doesn’t carry that information either (e.g. MOV has no standard range flag, but MXF does, if I’m correct), then apps simply can’t know how to treat such a file, and only manual interpretation (the user has to know whether the file is full or limited range) allows for proper handling. This is why any exported full range ProRes file should be treated with caution and may behave differently depending on the app. Manual interpretation may be needed, so people should always keep that in mind.

DNxHR (also Cineform) is slightly different. Not only does it have two separate modes, YUV and RGB (so you can actually store input RGB data without any conversion), it also has more flags in its frame structure and allows for range specification: full vs. limited (there is even a way to flag an actual range that differs from the standard one, e.g. 64-940). If apps are bug-free and read DNxHR files properly, then automatic levels detection should work fine. Resolve used to have a bug in setting the proper range in DNxHR headers during export, but it was reported and fixed (although BM keeps reintroducing the same bugs in later versions). Resolve allows for manual interpretation of range, so you can import full range ProRes (or any other) files, but most likely with manual intervention. Premiere doesn’t, so things will most likely go wrong with full range ProRes files in Premiere.

As mentioned in the article, Resolve always works in full range internally (so do the scopes, etc.). No one should ever try to limit grading to the 64-940 range because, e.g., the result will later be exported for broadcast, which is typically meant to be limited levels. This is wrong thinking, but it looks like there are people doing it. If you want pure black, grade to 0 in the scopes. If the codec is YUV, Resolve will scale this internally to the correct value during export.

This brings me to another point. Source files, processing, and monitoring don’t have to go through the same range setting. You can work with limited levels files but monitor over full range. Same with export. All those elements are independent, and it’s up to the app to properly handle the needed conversions.

If we think about ProRes or DNxHR/Cineform or any other intermediate codec as a high quality master/working format, then they should actually be RGB-based and always full levels. This would make things easier (and we would not waste time on the YUV/RGB conversions which keep happening in any app/monitoring chain, sometimes many times over). The limited levels concept is old and not needed these days. It’s a leftover from the analog era.

YUV, on the other hand, is a different story. It’s needed for heavily compressed codecs, as it allows luma and chroma information to be encoded differently, which is not possible with RGB. RGB is a compression-unfriendly way of storing image data because luma is linked to chroma. This is probably why ProRes is always YUV internally (it lets it be more efficient). The thing is that those intermediate codecs are high bitrate, so we could move to RGB (like DNxHR does for its 444 profile) and compensate for any loss by raising the bitrate. YUV can be kept for H.264/H.265, etc.

YUV can be limited or full range, and there is really no rule that 444 must be full range and 422 limited (they can be any combination). It should all just be flagged properly, and then no guessing would be needed. The problem is that we have such a big issue with metadata/flags: we pay far too little attention to them, just blindly focusing on the actual video data.

Please also stop using IRE. It is such an outdated and irrelevant measure (a measure for composite signals) in the digital era 🙂

Thanks for your in depth comment Andrzej. You’ve pointed out a lot of good things to note with using QuickTime based workflows and full range. I agree with you, the unfortunate part of dealing with levels in real world scenarios is that they aren’t treated like other pieces of embedded metadata and it’s very difficult for the average user to know what’s happening under the hood of the files. Many places and facilities use full swing ProRes444 QTs. So many things about working with video are based on obsolete technologies. I totally agree with you. The problem is the real world implementation of all these things. It would be great if we could wipe the slate clean and start over with a new digital landscape. Maybe one day as technologies evolve we will get there. From the days of tape, we no longer are limited by resolution for example, but still need to deal with a lot of other archaic things. Appreciate the comment and your precise explanation of things that were slightly unclear or a little off with the article.

Thank you for all this great information. I believe this is the issue I am having.

The colorist sent me a 4K DnxHr 444 in a .mov wrapper and when I open it in Premiere or Resolve the color is washed out. When I play it in VLC it looks like its supposed to. I’m not sure where to go from here. Is there a way to make either program interpret the video correctly? Do I need to ask the colorist for another render?

Hi Jay, thanks for the comment. It’s tough for me to know what you’re looking at without seeing it. The first thing I would do is have your colorist render out color bars and check them in Resolve and Premiere. That will tell you if you have a levels issue or a color tagging issue. If it’s a levels issue, you can change the levels interpretation in Resolve. If it’s a color tag issue, that is a bit more complex. Another thing you can do is change your display profile to Rec709-a and see if everything matches. If it does, then you have a display profile/color tagging issue. I have later articles on the site that explain color tagging to check out. Hope that helps!

If it’s 444 then it should probably be full, but that’s not a rule (more of an expectation). We have to establish what the actual data in the file is and also what the headers say (whether they match the data or lie).

Load the file into Resolve, open the scopes, and then toggle levels between full and limited (in the clip attributes settings, always pressing OK after each change). Watch the scopes; this way you can establish whether the file is full or limited range. Then check which setting matches Auto to establish whether the file is flagged correctly:

Full range= auto and full look the same

Limited range= auto and limited look the same
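The same check can be reasoned about numerically if you know where reference black and white sit in the file, for example from a bars clip. The sketch below is only an illustration with hypothetical helper names (`decode`, `guess_range`); it is not something Resolve exposes, and it assumes 10-bit code values and a clip that really contains pure black and pure white.

```python
def decode(code, assume, bit_depth=10):
    """Normalize a code value to 0.0-1.0 under an assumed range ('full' or 'limited')."""
    shift = bit_depth - 8
    if assume == "full":
        lo, hi = 0, (1 << bit_depth) - 1
    else:
        lo, hi = 16 << shift, 235 << shift
    return (code - lo) / (hi - lo)


def guess_range(black_code, white_code, bit_depth=10, tol=0.01):
    """Return the interpretation that puts reference black at 0.0 and white at 1.0."""
    for assume in ("full", "limited"):
        black = decode(black_code, assume, bit_depth)
        white = decode(white_code, assume, bit_depth)
        if abs(black) <= tol and abs(white - 1.0) <= tol:
            return assume
    return "unknown"


if __name__ == "__main__":
    print(guess_range(64, 940))    # bars whose black/white sit at 64 and 940
    print(guess_range(0, 1023))    # bars whose black/white sit at 0 and 1023
```

Whatever this says about the data, compare it with what the app's Auto setting picks. If the two disagree, the file is flagged incorrectly (or not flagged at all).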

Resolve 17b7 by default exports 444 modes (10 and 12-bit) as limited range (maybe slightly against expectation), but it does it properly: the actual data and the flags are both limited range. If another app relies on flagging, it will import the file correctly because data and flags match. You can force Resolve to Full in the export settings, and then it will export full range data and flag the file properly. So all good here. To be precise, I’m talking about the MXF container. MOV needs separate verification.

When it comes to Premiere (latest version 14.7 build 23): from my test, it interprets all files as limited range. Any file exported as full range (which really is full range) will look dark in Premiere, and there is no way to override it, as Premiere has no levels settings like Resolve. You simply have to play with a correction and stretch it back.

Looks like I messed up my last test. Premiere (14.7 build 23 Mac) behaves the same for MOV and MXF, so ignore previous info about MXF (for Premiere).

In short:

Resolve does everything as expected; it sets and reads flagging properly. One behaviour that is maybe not the best is that, with the auto setting, 444 exports are limited range. I think full range would be more expected/correct (especially when files are RGB-based).

Premiere is hard-coded: 422 always expects limited range and 444 always full range.

For exports: MXF does the same as for import. All files will import properly to Resolve by default.

For MOV there is a bug. 422 exports are limited range but flagged as full. Such files will look washed out in Resolve, so you need to manually override to limited range.

Hey there! This is a great insight on the topic, although I still have some confusion about a couple of things that I would be thankful if you could clear up 🙂 So I don’t own a fancy dedicated video monitor; I just have a humble, budget monitor. In the Nvidia control panel I can switch between limited and full RGB (limited was the default setting). So, from what I understand, I should turn this setting to ‘full’, work normally in Resolve, and then export as ‘limited’ for things like web and TV broadcast content?

Hi Tomas. Are you using a blackmagic video card to send the signal to your monitor? Or is it directly connected via your computer’s graphics card?

The rule is simple. Whatever you set on the GPU, you have to duplicate on the monitor. Typically the HDMI link will be flagged and the monitor follows the sender’s settings automatically (full or limited is not that important here; both should work). Nearly all good monitors have Auto, Limited, and Full levels settings (and also display info about the incoming signal) and allow a manual setting if things are not working correctly in auto mode. If your monitor has no such ability, then it’s just a fancy monitor, but not a good one 🙂

Hello Dan,

Thank you for the post; it helps me clarify the struggle around this giant decision. Still, my mind keeps going around in circles and I can’t decide how to set it up.

So, from what I understand: to get the most out of the material from any high-end camera, leave the settings at full range. But if we are doing several deliveries, say for DCP and for streaming, the render options should change: video levels for any work delivered to the internet, and full for a controlled environment. (I just colored a documentary and monitored the whole time with video output; the final for the DCP was DNxHD 422 10-bit set to video levels, and it looked the same in a movie theater.) If I had instead colored the movie with full range output, should the render be different?

I have a custom-built PC with a Decklink 4K sending signal to an LG NanoCell video monitor calibrated to Rec709.

So I’ve been playing with Sony material in S-Gamut3/S-Log3 and using full levels to expand the scale, but the final version goes to the internet. Should the monitoring data levels be set to video, or just the export? And when checking in Premiere, does this mean that Premiere natively plays back videos in the same color space and levels as the streaming platforms?

Sorry to bother you with so many questions.

Thanks again for the post; any advice on these questions will be of great help.

Good day.

Regards,

Hi Ricardo. To be honest, I think the simplest solution is the best. My rule of thumb is to only use a full range pipeline if the entire chain is set up for full range including delivery. For 99 percent of work out there, video range works just fine. Anytime you’re using video files like ProRes or DNxHD, you’re in a video world in general. As long as you match your viewing environment to how it will be seen, you’ll be fine. Resolve works internally at full range anyways, so it’s only getting scaled down during monitoring or output. Full range workflows are also done at higher levels generally using DPX files or EXRs and really the reasons for this are just to maintain those full bit values throughout the exchange of files in and out of Resolve. But if you’re just in Resolve and making deliverables either video range or full range, unless you absolutely need full range for a deliverable, it seems more straightforward to me to use video levels in the whole chain.

Hello Dan, terrific information, glad I found your website. Question regarding GPU color range setting for PPro and After Effects… I’m getting so many conflicting statements, I hope you can set it straight.

My computer monitor is calibrated (x-rite calibration) at rec.709, gamma 2.4 I’m using color management in PPro and After Effects, which forces both to use my calibration icc profile.

So… what setting do I use for the GPU? 0-255 full or 16-235 limited? The final project is for broadcast (Blu-ray disc). Currently, my GPU is at 16-235, but my final export to disc, using the H.264 Blu-ray preset in Adobe Media Encoder, is giving me a final result that looks like a gamma shift. The whole HDTV image is a bit bright, like the gamma was tweaked (the HDTV is also calibrated). The footage looks fine on both timelines, but not on the HDTV. Is my GPU range wrong? Some say PPro needs full range, but others do not. Very confused. Thanks for your advice.

Hi Letty, thanks for the comment. If you’re making a blu-ray for a TV, it’s important to have an external calibrated video monitor to see your images while working in Premiere. Calibrating computer monitors doesn’t always get you close to a television calibration because you’re dealing with ICC profiles and a lack of in-depth control, along with any issues in your signal chain. Computer displays are full range and your OS has a color profile that might not match that video standard, so there are many factors that play into why there could be a potential gamma shift. Ideally, your whole chain would be video levels for a blu-ray. TVs today and blu-ray players can display full range, but it’s trickier to encode and set them up that way.

Thank you for your advice. Turns out my monitor is connected with hdmi cables and the gpu is treating it as an hdtv. HDTV is 16-235 color range, so I’m setting my gpu to match that prior to monitor calibration. Not sure until I test more, but if I remove color management from PPro and AE, the OS calibrated color profile of rec709 will be the default and I should be able to color grade and make a disc that will match my timeline. Does that sound about right to you? Thanks for your time and thoughts on the matter.

Thank you. I was afraid of that. So I have to buy an external monitor that is calibrated to rec709 and gamma 2.4 to color grade for a bluray disc, even though my monitor is an hdtv monitor hooked up via hdmi cables? I thought that would be equal to an external monitor because it’s 16-235 and I think my gpu is treating it like an hdtv because of the hdmi cable. Thoughts on that, or must I buy an external $$$ monitor to color grade for bluray?

So disappointed that I can’t make a decent looking bluray in PPro with my set up.

Can you recommend a monitor I should be using? I guess my hdtv LG ultrawide LED isn’t going to cut it? Something to color grade in PPro where the timeline will match the bluray and play great on hdtv. Thanks!

A lot of people use a calibrated LG CX with a Blackmagic card. Or you could just buy a Blackmagic mini monitor and go direct into your TV if you just want it to look right on your particular TV. You can’t escape your OS if you’re going directly out of a GPU to a TV unfortunately. By using a dedicated video card, you can go around the OS color profiles. In a perfect world of computer/TV, it should work yes, but there is still a large gap between the two which is why most broadcast work is still done on dedicated video displays.

Thanks again.

With help from the Adobe forum, it turns out I can simply calibrate my monitor to rec709 2.2 (not 2.4) and 100 cd/m². This lowers the gamma (makes the image a little brighter); then I adjust levels and color grade, export using H.264 bluray, burn the disc, and PRESTO! The HDTV matches the timeline in PPro. A simple workaround and highly effective. I can use a LUT to convert the 2.2 back to 2.4 if needed. Of course this workflow assumes using regular SD footage (it should work with HD, but I’m not sure HD users are having problems making bluray), with the GPU at 16-235 and the HD monitor with hdmi cable also at 16-235. It’s great to now get what I see on the timeline on the HDTV big screen, with no need for $$$ monitors and graphics cards. Hope this helps somebody out there.
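As a rough illustration of why a 2.2 calibration looks a little brighter than 2.4, here is a tiny sketch that treats the display as a pure power law. That is a simplification (real calibration targets such as BT.1886 also account for black level), and the function name is just for illustration.

```python
def displayed_light(signal, gamma):
    """Relative light output (0.0-1.0) for a normalized signal on a pure power-law display."""
    return signal ** gamma


if __name__ == "__main__":
    for signal in (0.1, 0.25, 0.5, 0.75):
        at_22 = displayed_light(signal, 2.2)
        at_24 = displayed_light(signal, 2.4)
        print(f"signal {signal:.2f}: gamma 2.2 -> {at_22:.4f}, gamma 2.4 -> {at_24:.4f}")
```

Black and white stay put, but every value in between comes out brighter at 2.2 than at 2.4, with the biggest relative difference in the shadows.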

Glad you found something that works!

Hi Dan,

very nice explanation. Thank you for taking the time to explain all of this.

Are you familiar with DVS clipster? I am under the impression that when interpreting ProRes 4444 in clipster the levels are not always correct. For instance a ProRes 4444 which looks correct in video level or auto on resolve, is then listed by clipster metadata reader as full range. This generates a lot of confusion. Have you had any experience with this?

Yes I know Clipster, but I haven’t used it myself. That’s one of the issues with 444 codecs in general. Apps interpret them differently. Flame, for example, had an issue with properly exporting the correct levels with ProRes444. I think they’ve fixed it since. The best way to test is to use bars and check them in Clipster with a scope if it has scopes in the software. That’ll tell you pretty quick what’s going on levels wise.

Hey Mat,

Check which version of Clipster you are using as I am most certain only the latest version handles HR levels correctly. It also gives you the option to export a FullRange or Limited version of HR where the older version does not.

Hope this helps.

Hi Mat,

I use Clipster every day and I can confirm it interprets ProRes 444 as FULL range. If you want more information about full/head ranges in Clipster, do not hesitate to read its great software manual (chapter parameter – encoding ranges).

See you,

Patrick (from Paris, France)

PS: Thanks a lot Dan for your amazing article!!!

Thanks for checking it out Patrick!

I found this extremely useful! Thank you so much for breaking this down.

Glad it was helpful!

Thank you! This was very, very useful!

If I may ask: if I understood correctly, if the master settings in Resolve are set to video levels, I should deliver the video set to video levels too?

How should I deal with timelines containing both raw and non-raw footage? I should work in video levels, is that right?

And one last question: what about working in video levels with a monitor plugged into a mini monitor, so sending a 444 SDI output? Am I right to say that in this case the monitor will still display video levels, because Davinci is set that way?

Thanks again for your work

Hi David. In the master settings, the level setting is for external signal monitoring, not what’s happening in the application. Inside Resolve, everything is full range. Yes if your project settings are video levels, Resolve will send that signal out to the monitor. As long as your monitor is set to video levels, that will work fine. If it’s set to full levels, there will be a mismatch. Hope that helps.
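To picture what a mismatch does, here is a minimal simulation in 10-bit code values. The names (`RANGES`, `seen_on_monitor`) are invented for the example and this is only a sketch of the arithmetic, not of any actual Resolve or monitor firmware behavior.

```python
RANGES = {"video": (64, 940), "full": (0, 1023)}  # 10-bit black/white code values


def encode(value, levels):
    """Turn a normalized image value (0.0-1.0) into a signal code value."""
    lo, hi = RANGES[levels]
    return lo + value * (hi - lo)


def display(code, levels):
    """Turn a signal code value back into normalized brightness, clipping out-of-range codes."""
    lo, hi = RANGES[levels]
    return min(max((code - lo) / (hi - lo), 0.0), 1.0)


def seen_on_monitor(value, resolve_levels, monitor_levels):
    return display(encode(value, resolve_levels), monitor_levels)


if __name__ == "__main__":
    for sender, monitor in (("video", "video"), ("video", "full"), ("full", "video")):
        black = seen_on_monitor(0.0, sender, monitor)
        white = seen_on_monitor(1.0, sender, monitor)
        print(f"Resolve={sender:5s} monitor={monitor:5s} black={black:.3f} white={white:.3f}")
```

When both ends agree, black and white come back where they started. Video out into a full range monitor gives lifted blacks and dull whites (the washed-out look), while full out into a video range monitor pushes shadows and highlights past the legal range, where they clip.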

Hi Dan,

To add another layer of complexity, the HDR standard has adopted full range. However, Dolby Vision recommends exporting ProRes 422HQ for HLG delivery, which by default is interpreted as video levels by most players and NLEs, including Resolve.

Ha true! These color management issues don’t seem like they’ll get any easier anytime soon! This seems to be a helpful resource regarding dolby HDR and ProRes: https://professionalsupport.dolby.com/s/article/Dolby-Vision-Legal-Range-workflows-for-home-distribution?language=en_US

Dan – Thanks a lot for the helpful article on encoding levels.

In the most recent Apple ProRes White Paper, January 2020, I haven’t been able to find any reference to levels (video or full range) being part of the Apple ProRes specifications. Could you please point me to the right source?

Hey Willian. I think the reason why it’s not included is because it isn’t specified as either. Which means you can encode or decode ProRes as full or video. Most software by default interprets ProRes as video so it’s important to understand your pipeline before exporting or importing.

Thank you so much Dan. I had the issue of blacks being too dark after rendering graded videos out of Davinci Resolve. I worked in REC709 (scene) and rendered with full levels, as I assumed it would turn out in “better quality”.

Now, after your genius article about levels, I figured out to export those videos with video data levels set, and voilà, they look as graded and displayed within Davinci.

As I am quite new to this topic, I have to give you a BIG COMPLIMENT because YOUR explanation of this complicated topic is so easy to understand and, on top of that, sympathetic and funny.

I really enjoyed it!

Thank you!! Glad you enjoyed it and thanks for the comment!

Excuse my bad english

There is another levels problem that you don’t talk about. Almost all Rec.709 video cameras shoot in the xvYCC color space (a Sony spec), or x.v.Color as Panasonic calls it. This is especially the case if you set your zebra to 109 IRE, which is recommended by Sony to avoid wasting bits. The range for Y is 16-255 and there is very little restriction on the levels of U and V. This signal produces many color values that don’t fit in RGB because they are above 255 (1023 in 10-bit). One or two channels can be far above (not all three at the same time). Many TVs can display this signal without clipping. Many people think that this is not possible because 8 bits can only hold values between 0 and 255. But they ignore that the color levels represented by UV have nothing to do with the color levels encoded in RGB; it’s indeed not the same level scale. For example, before the conversion from 16-235 to 0-255, YUV 255,128,128 is RGB 255,255,255. Now, if U and/or V is not 128, then you have the maximum luminance but colored, which gives one or two RGB values above 255, and thus out of bounds.

If you put a clip with a bright, strongly exposed red flower in Resolve with all the data levels set to full, you get an exact conversion from YUV to RGB, and a lot of data is above 1023 in the RGB Parade (lower the gain with a node to see it), but all the data is far under 1023 in the YUV Parade. The data above 1023 is just the YUV levels that cannot fit in RGB; it can go up to level 360 (1440 in 10-bit) with many cameras, and this is not visible on the camera’s histogram because it shows only the Y level! There are also levels under 64, which are colors under 0 in RGB when you convert from 16-235 to 0-255 (also used to increase the gamut). Resolve doesn’t lose anything at import time, but it does at export time: all the levels above 1023 are clipped if you export in RGB (except in float RGB) or in YUV, even with the “do not clip” boxes checked on the Deliver page.

So, never use Resolve to transcode a clip to another YUV codec, because you are clipping all your beautiful bright colors. The only solution is to export at a reduced gain (so that there is nothing above 1023) in a 10-bit YUV codec (to reduce banding artifacts). Edius, on the other hand, works only in YUV. Nothing is ever lost. The YUV values on the timeline are output as is to the output YUV codec, so there is never any degradation. It is very important to preserve all the levels in a master before downgrading it to the very poor broadcast quality! Furthermore, a non-degraded original can easily be incorporated into a log HDR workflow, where it can fit without clipping the levels that are out of bounds for broadcast Rec.709. All the YUV codecs can encode the full color space of YUV. It’s always badly designed software that causes problems. For example, After Effects hard clips your YUV to the 16-235 range at import time and expands this to 0-255, which is catastrophic because the loss is not recoverable. Resolve does not have this big issue but clips at export time. So I wrote software to convert YUV to 16-bit RGB with a gain reduction. Now I can edit my clips at lower brightness in almost all of that (bad) software without losing data. I output my work in 16-bit RGB and convert it back to the original gain in YUV with my other software. This is tedious, but it’s the only solution.

I am waiting for your reactions

Hi Drand, thanks for the comment. The level of detail here is great and I think that you’re right: software in many ways is limited by legacy Rec.709-style ways of working that clip or clamp values. That being said, how technical and into the weeds we want to get about this level of precision is debatable. Like many things in post, it comes down to your priorities and what’s needed at the end of the day for a particular project. From an engineering perspective, what you’re saying makes total sense and explains many of the issues that people will run into without understanding why. From an artist/producer point of view, this kind of precision might be less important for their timelines or projects. Either way, thank you for contributing. I really love your depth of knowledge in this area.
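
Drand’s point about chroma pushing RGB out of range can be checked with a few lines of arithmetic. The sketch below is a minimal illustration, assuming the standard BT.709 luma coefficients and treating the 8-bit code values as full range with no 16-235 scaling, as in the comment’s example. The function name `ycbcr_to_rgb_709` and the sample values are made up for illustration, and nothing here reflects how Resolve or any camera implements the conversion.

```python
# BT.709 luma coefficients (KR + KG + KB = 1).
KR, KB = 0.2126, 0.0722
KG = 1.0 - KR - KB


def ycbcr_to_rgb_709(y, cb, cr):
    """Convert 8-bit Y'CbCr code values to unclipped R'G'B' code values.

    Assumptions: full-range interpretation, chroma centered on 128,
    and no clamping, so out-of-range results stay visible.
    """
    pb = cb - 128.0
    pr = cr - 128.0
    r = y + 2.0 * (1.0 - KR) * pr
    b = y + 2.0 * (1.0 - KB) * pb
    g = (y - KR * r - KB * b) / KG
    return r, g, b


# Neutral chroma: maximum luma maps to plain white.
neutral = ycbcr_to_rgb_709(255, 128, 128)

# Hypothetical bright, saturated red: luma is within range, red is not.
red_flower = ycbcr_to_rgb_709(235, 110, 200)

print([round(c, 1) for c in neutral])
print([round(c, 1) for c in red_flower])
```

With neutral chroma all three channels equal the luma value. In the second sample only the red channel lands above 255, which is the "one or two channels, not all three" behavior described in the comment.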

Dan, great writeup! I’m working in Resolve and have an amazing grade that I can’t seem to translate over to Vimeo. I’m working with ACEScct color science (the source material is film) and monitoring in Rec709 gamma 2.4.

I tried just changing the tags to Rec709 and Rec709a at render as you suggested… even tried some of these solutions… but still can’t nail the richness and contrast of my grade. https://www.youtube.com/watch?v=1QlnhlO6Gu8&t=1377s&ab_channel=LELABODEJAY

Any QuickTime that has a 1-1-1 tag exported from my project is compromised in some way.

Thanks David, I appreciate the kind words. Color management is a different ballgame than non-color management, especially without an external grading monitor. You really need a grading monitor with ACES in Resolve in my opinion, especially if you’re using project wide color management. The tricky thing with ACEScct in Resolve is that you can’t change your timeline color space since it’s ACES. So it’s tricky to know what you’re monitoring in Resolve’s viewer. In non-color managed environments, you can change that timeline color space setting, which will match the tags used for the Mac display setting, so you can be confident the viewer matches the output. This is why a lot of people don’t use project wide color management; they’ll do it at the node level so there is more user control over the transforms and viewing options. What I’d recommend if you have the time is switching your project to non-color managed, using CSTs or ACES transforms to match what your color managed project was doing, using a timeline color space like rec709-a, and tweaking the image from there to get it back to what you were seeing. Hope that helps!

ProRes 4444 and 4444XQ are both YCbCr internally and therefore always Video Range internally. The ProRes Decoder SDK can decode to RGB Full Range, but the video essence internally is ALWAYS YCbCr. This has been confirmed with Apple. There were many devices and software several years ago that misinterpreted video ranges for ProRes 4444, but updates have fixed them (Cloister, Resolve, etc).

Can you fix the explanation for ProRes 4444, as it seems to indicate that ProRes stores RGB video essence (which it doesn’t)?

Thanks Chris. I appreciate your insight on full range encoding with ProRes444. I’ll update the article and tag you.

Hi, Dan, thanks a lot for this excellent article and I’m glad to see you’re still answering questions! I have two for you, if you don’t mind, which I’ve been unable to find a clear answer to.

1) If there is a mismatch between full vs legal levels baked-in somewhere in a post-production workflow (for instance, a camera file source with data levels is incorrectly imported and transcoded in an NLE as legal), is the original data compromised? In other words, if a new media file is created using the incorrect levels and is then reinterpreted without re-ingesting, has the quality been compromised?

2) My understanding is that YUV is an analog format and that YCbCr is digital, yet YUV still seems to be a common reference in the digital domain. Is the term just being misused or are some digital files still encoded in YUV?

Hi Doug, thanks for the comment. In general, there isn’t a huge quality difference between full and legal levels, it’s more of an overarching type of code encoding that is beneficial for particular pipelines, workflows and deliverables. It’s not like downgrading bit-depth or clamping quality by choosing a lower quality codec for example. The biggest issue with full and legal is just misinterpretation more than quality loss. Yes you are correct, YUV is analog and YCbCr is digital. Sometimes they are used interchangeably but technically anything digital is not YUV, it’s YCbCr. They are similar in that they are describing similar things. Hope that helps.
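
To make the distinction in the first question concrete: re-tagging a file changes only how its code values are interpreted, while a transcode that applies the wrong scaling bakes the result into new integer values. The following sketch assumes 8-bit codes, the usual 16-235 legal range, and a hypothetical helper name. It shows that expanding full range data as if it were legal clips everything below 16 and above 235, which cannot be recovered from the new file alone.

```python
def legal_to_full(code):
    """Expand an 8-bit legal-range code (16-235) to full range (0-255), with clipping."""
    scaled = round((code - 16) * 255 / 219)
    return max(0, min(255, scaled))


# A full-range ramp that is wrongly treated as legal during a transcode.
source = list(range(256))
baked = [legal_to_full(c) for c in source]

shadow_codes_below_16 = sum(1 for c in source if c < 16)      # all forced to 0
highlight_codes_above_235 = sum(1 for c in source if c > 235)  # all forced to 255
surviving_levels = len(set(baked))

print(shadow_codes_below_16, highlight_codes_above_235, surviving_levels)
# prints: 16 20 220
```

Of the 256 original levels only 220 distinct values remain, so in this baked-in case the original file would need to be re-ingested. If only the interpretation flag was wrong and no new integer file was written, nothing is lost.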

This article nails the importance of blending full lessons and video materials. It’s the recipe for an engaging and effective learning experience. Dealing with levels can be challenging, but this post’s suggestions on using both full and video content make it much easier.

Thanks for the comment!

Hi Dan,

Thanks for the detailed document on this hard topic.

I reviewed the Apple ProRes codec family, and I couldn’t find any information on ProRes RGB being data level and YCbCr being video level.

Is there a link where I can confirm this information?

Hi Willian, I haven’t been able to confirm that information either and updated the article with my findings. There is some conflicting information and wording around RGB encodings with Apple ProRes444. The white paper led me to believe that ProRes can be encoded with full level RGB data. Generally RGB encoding would be full levels as the two usually go hand in hand. Other readers have pointed out that ProRes is YCbCr only even with 444 encodings which makes more sense. This has led to confusion even among software developers as some applications incorrectly interpreted ProRes444 as a full level file even though it might have been video level YCbCr. 444 doesn’t assume RGB, YUV can also be 444. It’s confusing. Check out the update.

Just dropping a comment to say thank you. Great article that just solved my issue!

Awesome, glad it helped! Thanks for the comment.

Aloha, Dan. Amazing article and I’ll be keeping it and sharing it in my summary about full and video levels. It’s been quite a journey, trying to understand it all. But, your article has been fantastic at making it clear.

There are still many questions I have, as I work with Final Cut Pro X, which doesn’t have any option to tag files as full or video. I just have to hope the NLE is scaling when appropriate while I work with both full and video range footage. I’m guessing FCPX is doing the right thing, because Invisor seems to show the various types of footage as having full or video tags.

Still, I’m concerned about whether the LUTs I’m using to convert S-Log3 (full range) and D-Log (video range) are working correctly in FCPX. I think I’m going to have to start figuring out how to use color bars to check, but I don’t have a clue how to use them to test whether my NLE is interpreting various cameras’ Log signals as full or video.

Hi Sky. Programs like Final Cut and Premiere don’t usually provide specific options to interpret levels like Resolve or Flame do. In general, using scopes can help, as values will look clipped or pulled in if interpreted incorrectly. If you’re working with ProRes files, those will be interpreted as video by default anyway. Often there is room to access the full range of a camera file even within a video range world, with superwhites for example. So don’t worry too much! If you start to see clipping or particular issues, it’s worth investigating, but in general you should be good in FCPX with camera files.
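
The scope check mentioned above comes down to simple arithmetic on known bar values. As a rough sketch (8-bit codes, a hypothetical function name, and the simplifying assumption that the scope reads 0% at black and 100% at white), this shows where the black and white patches of a test pattern would land under each interpretation:

```python
def scope_percent(code, interpreted_as):
    """Return where an 8-bit code lands on a 0-100% scope for a given interpretation."""
    if interpreted_as == "video":
        return (code - 16) / 219 * 100
    if interpreted_as == "full":
        return code / 255 * 100
    raise ValueError("interpreted_as must be 'video' or 'full'")


# Video-level bars (black 16, white 235) read correctly, then wrongly as full.
video_ok = (scope_percent(16, "video"), scope_percent(235, "video"))
video_as_full = (scope_percent(16, "full"), scope_percent(235, "full"))

# Full-level bars (black 0, white 255) wrongly read as video.
full_as_video = (scope_percent(0, "video"), scope_percent(255, "video"))

for label, pair in [("video, read as video", video_ok),
                    ("video, read as full", video_as_full),
                    ("full, read as video", full_as_video)]:
    print(label, [round(p, 1) for p in pair])
```

Video-level bars read as full sit at roughly 6% and 92%, the "pulled in" look. Full-level bars read as video land at roughly -7% and 109%, so they clip unless the application preserves sub-blacks and superwhites.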

Excuse my bad English.

Almost all video cameras record luminance (Y) in the 16-255 or 64-1023 (0-109 IRE) range in a YUV codec. The U and V (CbCr) signals give a color to this luminance, so the color levels can go outside the RGB 0-1 space, i.e. RGB values smaller than 0 or larger than 1023, which very often appear on the Resolve RGB Parade scope but not in the YUV Parade. These out-of-bounds levels are correctly displayed on xvYCC or x.v.Color compatible TVs (like Sony and Panasonic). These levels are clipped when converting to an RGB codec that is not float. The only way to convert YUV video to another YUV codec without clipping is to use an NLE whose core works in YUV and never converts to RGB (for example Grass Valley Edius, if you do not use a LUT filter). In Resolve, if you set your entire workflow and Clip Attributes to Full Level, you will see the real levels of the video on the scopes. Anything above 1023 will be clipped if you don’t export to a float codec. To see what is above 1023 in the RGB space, look at the RGB Parade scope and decrease the gain. Resolve does not clip anything on import thanks to its 32 bit float core, but you should know that you will clip intense colors (e.g. a strongly exposed red flower) if you export in integer RGB or YUV (the fact that it is impossible to export the original signal in a YUV codec is a serious flaw of Resolve).

Now, if you import your YUV clip into After Effects, it’s a disaster. Anything below 16 or above 235 is permanently clipped at import time because of the automatic 16-235 to 0-255 conversion, with no possibility of recovery by reducing the gain of the video in this program as you can in Resolve. The disaster is even greater if you import Full Level YUV, because the blacks will now also be heavily clipped. The only solution is to set your clip to Full Level in Resolve, adjust the gain so that nothing is above 1023 in the RGB Parade scope, and export in Full Level 16 bit RGB (e.g. a TIF). After Effects will not do the 16-235 to 0-255 conversion on RGB still images. If your video black level was 16, put a temporary adjustment layer above your clip with a Levels filter with Input Black at 16 to adjust black for your computer monitor. When you import back into Resolve, apply the reverse gain to return to the original level.
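
The gain-reduction workaround described above can be sketched in a few lines. This is only an illustration under stated assumptions: pixel values are normalized floats where 1.0 is the integer maximum, the sample values are hypothetical, and the helper names are invented here. It handles overshoot only; values below 0 would still clamp and would need an offset that is outside this sketch.

```python
def fit_gain(peak):
    """Gain that brings the largest value down to 1.0 (never boosts)."""
    return min(1.0, 1.0 / peak)


def encode_16bit(values, gain):
    """Apply gain, then quantize normalized values to 16-bit integer codes."""
    return [max(0, min(65535, round(v * gain * 65535))) for v in values]


def decode_16bit(codes, gain):
    """Invert the quantization and the gain to recover the original scale."""
    return [c / 65535 / gain for c in codes]


# Hypothetical normalized RGB samples; 1.41 stands in for an overshooting red.
samples = [0.25, 1.0, 1.41]
gain = fit_gain(max(samples))
codes = encode_16bit(samples, gain)
restored = decode_16bit(codes, gain)

# What a plain integer export without gain reduction would keep.
clipped = [min(1.0, v) for v in samples]

print([round(v, 4) for v in restored])
print(clipped)
```

The restored values match the originals to within 16-bit quantization error, while the direct integer export flattens the overshoot to 1.0. The cost is that the reduced gain spreads the signal over fewer codes, which is why the comment pairs the trick with a 16 bit intermediate.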

Another note: After Effects and Photoshop set to 16 bit actually work in 15 bit. Internally, the levels range from 0 to 32768, not 0 to 65535. All your outputs have 15 bit precision but are encapsulated in, for example, a 16 bit codec, with values truncated to the nearest byte.
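
The practical effect of a 0 to 32768 internal scale can be estimated with integer arithmetic. This sketch assumes a simple proportional rescale between 0-65535 file values and 0-32768 internal values, which is an assumption for illustration and not Adobe’s documented conversion. It counts how many distinct 16-bit codes survive a round trip.

```python
def to_internal(code16):
    """Map a 16-bit code (0-65535) to the 0-32768 scale, rounding to nearest."""
    return (code16 * 32768 + 65535 // 2) // 65535


def to_file(internal):
    """Map a 0-32768 value back to a 16-bit code, rounding to nearest."""
    return (internal * 65535 + 32768 // 2) // 32768


# Push every possible 16-bit code through the internal scale and back.
round_trip = {to_file(to_internal(c)) for c in range(65536)}

print(len(round_trip))
# prints: 32769
```

Under this assumption about half of the 65536 possible codes remain, which is still far more than a 10 bit deliverable needs but is worth knowing when comparing files bit for bit.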

Thank you for the comment. This is great, deep information about RGB and YUV. Adobe in general makes levels issues fairly opaque and like you said does things under the hood that most users are not aware of. I prefer Resolve due to its flexibility, but as you say it’s not perfect. Most of these flaws have been in the software for a long time from the days of tape decks. I appreciate your insights!

Hi Dan!

Thanks for this great article! And excuse my “still trying to improve” English skills.

I have a question about levels.

Say all my source footage is from 3D renders, for example Arnold, and the output is a 16 bit EXR file.

Then I do comp or grading in Nuke (you mentioned it actually works in a 32 bit full range workspace).

Then I choose to export a clip in ProRes 422 (limited range, right?).

Finally, some players play the clip and parse the metadata correctly.

What I get should be exactly the same as in Nuke’s Viewer, right?

An internal scaling like full – limited – full won’t affect anything visually?

I assume the software will do the magic correctly if I tag the output video metadata right?

Hi Brandon, thanks for the comment. That’s generally right, yes. Most applications handle the scaling between levels correctly, especially applications like Nuke. To fully test your workflow, I’d suggest using bars and scopes to see if there is any scaling happening in the viewers or in the outputs. Typically where you run into issues is when taking full levels files into applications built around video levels, like an editorial program for example. With Nuke, I would assume that it is smart enough to know whether a file is encoded with full or video levels based on the file type. That’s how most programs know how to interpret levels, so it can be tricky if a file type that is typically encoded with video levels has been manually adjusted or encoded to full levels, for example. I hope that helps.
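
The full to limited to full round trip asked about above can be sketched numerically. This assumes 10-bit video levels of 64-940 and normalized float pixel values between 0 and 1. The helper names are hypothetical, and real encoders also involve the RGB to YCbCr matrix and chroma subsampling, which are ignored here. Under those assumptions, correct scaling introduces only ordinary quantization error:

```python
def float_to_legal10(value):
    """Encode a normalized 0-1 float as a 10-bit video-level code (64-940)."""
    return round(64 + value * 876)


def legal10_to_float(code):
    """Decode a 10-bit video-level code back to a normalized float."""
    return (code - 64) / 876


# Round-trip a ramp and record the worst-case error.
ramp = [i / 1000 for i in range(1001)]
worst = max(abs(v - legal10_to_float(float_to_legal10(v))) for v in ramp)

# Decoding the same codes as if they were full range lifts blacks and dims whites.
wrong_black = float_to_legal10(0.0) / 1023
wrong_white = float_to_legal10(1.0) / 1023

print(round(worst, 5), round(wrong_black, 3), round(wrong_white, 3))
```

The worst-case error stays within half a code step (about 0.0006), which is not visible. The visible failure is the mismatch case: black rising to about 0.063 and white falling to about 0.919 when a player ignores or misreads the range.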